When moving their workloads to the cloud, companies must rethink their backup concepts and strategies. Should they reuse existing on-premises backup solutions, switch to cloud-native backup features, rely on new cloud-ready third-party software, or even contract a backup service provider? There is no shortage of options. The key is understanding what remains the same in the cloud – and what changes.

Formulating the Requirements

The cloud does not change backup requirements; cloud providers only make implementing them more complicated or simpler. The three well-known dimensions of functional backup requirements stay the same:

- Recovery Time Objective (RTO), i.e., the maximum acceptable duration for restoring the data from a backup

- Recovery Point Objective (RPO), i.e., the maximum acceptable data loss if a backup has to be restored, measured as the time span between the last usable backup and the failure

- Backup location, i.e., the physical location where the backup resides.
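
To make RPO and RTO tangible, the following sketch checks a hypothetical periodic backup schedule against both objectives. It assumes that the worst-case data loss equals the interval between two backups plus the time one backup needs to complete, and that restore time can be estimated from data volume and an assumed restore throughput. The class, function names, and figures are illustrative, not taken from any product.

```python
from dataclasses import dataclass
from datetime import timedelta


@dataclass
class BackupSchedule:
    """Hypothetical description of a periodic backup job."""
    interval: timedelta          # time between the starts of two backups
    backup_duration: timedelta   # time one backup needs to complete
    data_gb: float               # amount of data to restore
    restore_gb_per_hour: float   # assumed restore throughput


def worst_case_data_loss(s: BackupSchedule) -> timedelta:
    # A failure just before the next backup completes loses everything
    # written since the previous backup started.
    return s.interval + s.backup_duration


def estimated_restore_time(s: BackupSchedule) -> timedelta:
    # Simplistic estimate: data volume divided by restore throughput.
    return timedelta(hours=s.data_gb / s.restore_gb_per_hour)


def meets_objectives(s: BackupSchedule, rpo: timedelta, rto: timedelta) -> dict:
    """Compare the schedule's worst case against the RPO and RTO."""
    return {
        "rpo_ok": worst_case_data_loss(s) <= rpo,
        "rto_ok": estimated_restore_time(s) <= rto,
    }


nightly = BackupSchedule(
    interval=timedelta(hours=24),
    backup_duration=timedelta(hours=1),
    data_gb=500,
    restore_gb_per_hour=250,
)
print(meets_objectives(nightly, rpo=timedelta(hours=4), rto=timedelta(hours=8)))
# {'rpo_ok': False, 'rto_ok': True}
```

In this example, the restore fits into the RTO, but a nightly backup can lose up to 25 hours of data, far more than a four-hour RPO allows; only shorter intervals or continuous backups would close that gap.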

The location aspect covers two dimensions. The first relates to data residency: In which jurisdictions are you allowed to store your backups, and where do you have to keep a copy? In highly regulated sectors such as healthcare or finance, regulators push companies and organizations to store their data within the regulator’s sphere of influence, typically the home country. Data protection laws, in turn, can restrict transfers of data, including backup data, to other countries.

The second dimension of location reflects how far away geographically one keeps backups. A backup two kilometers away from the primary data center survives if the primary data center burns down. It does not help, however, if a devastating flood destroys all buildings in the valley. So, clarifying which disasters your backup should survive is essential, too.
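
Both location dimensions can be expressed as simple checks. The sketch below assumes a hypothetical `Site` record with an ISO 3166-1 alpha-2 country code and approximate coordinates; it tests residency against a set of allowed countries and separation against a minimum great-circle distance computed with the haversine formula. The sites, coordinates, and thresholds are illustrative only.

```python
from __future__ import annotations

import math
from dataclasses import dataclass

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


@dataclass(frozen=True)
class Site:
    name: str
    country: str  # ISO 3166-1 alpha-2 code, e.g. "CH"
    lat: float    # latitude in degrees
    lon: float    # longitude in degrees


def distance_km(a: Site, b: Site) -> float:
    """Great-circle distance between two sites (haversine formula)."""
    phi1, phi2 = math.radians(a.lat), math.radians(b.lat)
    d_phi = phi2 - phi1
    d_lambda = math.radians(b.lon - a.lon)
    h = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def check_backup_location(primary: Site, backup: Site,
                          allowed_countries: set[str],
                          min_distance_km: float) -> list[str]:
    """Return a list of violations; an empty list means both checks pass."""
    problems = []
    # Dimension 1: data residency
    if backup.country not in allowed_countries:
        problems.append(f"{backup.name}: country {backup.country} not allowed")
    # Dimension 2: geographic separation from the primary site
    d = distance_km(primary, backup)
    if d < min_distance_km:
        problems.append(f"{backup.name}: only {d:.0f} km from {primary.name}")
    return problems


# Approximate city coordinates, for illustration only.
zurich = Site("Zurich", "CH", 47.3769, 8.5417)
geneva = Site("Geneva", "CH", 46.2044, 6.1432)
frankfurt = Site("Frankfurt", "DE", 50.1109, 8.6821)

print(check_backup_location(zurich, geneva, {"CH"}, min_distance_km=100))
print(check_backup_location(zurich, frankfurt, {"CH"}, min_distance_km=100))
```

The minimum distance is only a proxy: the threshold has to follow from the discussion of which disasters the backup must survive, since a fire, a flood in one valley, and a regional earthquake call for very different separations.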

Finally, there is one aspect every backup product owner might want to stay away from: archiving. Companies tend to move old data out of their operational systems and into an archive. Archiving is about understanding which data must be kept for how long, when data can be deleted, and how to ensure that data stored in old file formats remains accessible, for example, for ten years. Archiving is essential but orthogonal to ensuring that the organization has backups to restore business operations after a blackout or an earthquake.

Manageability of Backup Requirements

When hundreds or thousands of applications each try to tune their backup parameters to their specific needs, the discussions alone cost a fortune, and there is a risk that nobody notices when critical applications make the wrong choices. Thus, larger IT organizations group applications with similar needs and relevance. “Top-10” applications or “Gold” versus “Silver” versus “Bronze” are sample names for service levels, for backups and beyond. A few simple categories make it easy to see whether an application has the correct service level. One should never forget the cost impact of backups and of service levels in general. It may be worth investing in getting an online shop back into operation within minutes. However, it is a waste of money to do the same for a Christmas card application that lets sales staff order cards in the second week of November every year.

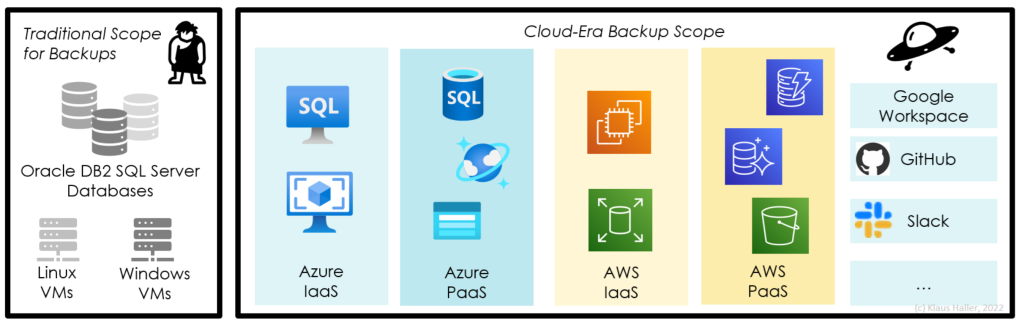
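
The idea of a few service levels can be sketched as a small lookup: each tier is defined once with its RPO and RTO, and an application is assigned the cheapest tier that still meets its business needs. The tier values below are hypothetical placeholders; real values are an organizational decision. The tiers are assumed to be listed from most to least expensive.

```python
from datetime import timedelta

# Hypothetical service levels, ordered from most to least expensive.
TIERS = {
    "Gold":   {"rpo": timedelta(minutes=5), "rto": timedelta(hours=1)},
    "Silver": {"rpo": timedelta(hours=4),   "rto": timedelta(hours=8)},
    "Bronze": {"rpo": timedelta(hours=24),  "rto": timedelta(days=3)},
}


def cheapest_tier(max_rpo: timedelta, max_rto: timedelta) -> str:
    """Return the least expensive tier that satisfies both objectives."""
    # Walk from the cheapest tier (last entry) to the most expensive (first).
    for name in reversed(list(TIERS)):
        tier = TIERS[name]
        if tier["rpo"] <= max_rpo and tier["rto"] <= max_rto:
            return name
    raise ValueError("no tier is strict enough; a custom solution is needed")


applications = {
    "online-shop": cheapest_tier(timedelta(minutes=15), timedelta(hours=2)),
    "christmas-cards": cheapest_tier(timedelta(days=7), timedelta(days=14)),
}
print(applications)
# {'online-shop': 'Gold', 'christmas-cards': 'Bronze'}
```

Picking the cheapest sufficient tier rather than the best one keeps the cost argument from the text explicit: the Christmas card application ends up in Bronze because nothing in its requirements justifies more.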

The New Challenge in the Cloud: Coverage

When companies only have IaaS workloads in the cloud, nothing changes. Backing up Linux or Windows VMs and Db2 or SQL Server databases works in the cloud just as it does on premises. The challenge comes from platform-as-a-service (PaaS) offerings: object storage is the new standard storage, and cloud providers offer file shares, serverless functions, and database-as-a-service offerings. The technical variety of sources to be backed up is higher than ever. To add further complexity, most companies rely not on just one cloud provider but on two or more, each with slightly different backup functionality.

Finally, there are software-as-a-service (SaaS) solutions such as Google Workspace, O365, Salesforce, and many lesser-known ones. For each of them, companies must validate whether the service offers a feature to export and externally back up the data and which kind of backups the vendor itself provides. This is a challenging task, and more than one company has to decide whether to accept insufficient backups or migrate to a different SaaS provider.
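
A practical first step toward coverage is an inventory that lists every resource across cloud providers and SaaS applications and flags those without a validated backup mechanism. The inventory entries, resource type names, and mechanism descriptions below are hypothetical; in practice, the inventory would be fed from each provider's resource APIs or a configuration management database.

```python
# Hypothetical inventory entries: (provider, resource type, resource name).
INVENTORY = [
    ("aws", "vm", "erp-app-01"),
    ("aws", "object_storage", "invoices-bucket"),
    ("azure", "managed_sql", "crm-db"),
    ("azure", "serverless_function", "order-webhook"),
    ("saas", "crm_saas", "sales-crm"),
]

# Hypothetical list of (provider, type) combinations for which the
# organization has validated a backup mechanism. Gaps are deliberate.
VALIDATED_BACKUP = {
    ("aws", "vm"): "snapshot policy",
    ("aws", "object_storage"): "versioning plus replication",
    ("azure", "managed_sql"): "point-in-time restore",
}


def coverage_gaps(inventory, validated):
    """Return all resources for which no validated backup mechanism exists."""
    return [
        (provider, rtype, name)
        for provider, rtype, name in inventory
        if (provider, rtype) not in validated
    ]


for provider, rtype, name in coverage_gaps(INVENTORY, VALIDATED_BACKUP):
    print(f"NO BACKUP: {name} ({provider}/{rtype})")
```

Keying the check on provider and resource type, rather than on the resource type alone, reflects that the same kind of service can have different backup capabilities at different providers.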

Innovative Cloud-native Backup Features

In the on-premises world, the 3-2-1 pattern was the golden backup rule: three copies of the data, on two different media types, with one copy kept offsite. However, cloud providers have turned two recent innovations into commodities in their cloud-native backup features: continuous backup and geo-redundancy. These features are a big step forward for many cloud customers compared to their on-premises backups. First, storing backups on the other side of the globe nowadays requires just a click in the cloud portal, so even the smallest company can afford it in the cloud. Second, continuous backups are available for many cloud services, allowing for point-in-time recovery. Do you want to restore the state of yesterday at 21:23? Click here, and you are done – no need for the 3-2-1 pattern anymore.
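
For reference, the classic 3-2-1 rule is easy to state as a check. The sketch below assumes a hypothetical `Copy` record for each copy of the data, with the primary data counted as one of the three copies, and tests the three conditions; the location names and media labels are illustrative.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Copy:
    location: str  # data center or cloud region (illustrative names)
    media: str     # storage type, e.g. "disk", "tape", "object_storage"
    offsite: bool  # kept away from the primary site?


def satisfies_3_2_1(copies: list[Copy]) -> bool:
    """Classic 3-2-1 rule: >= 3 copies, >= 2 media types, >= 1 copy offsite.

    The primary data counts as one of the three copies.
    """
    return (
        len(copies) >= 3
        and len({c.media for c in copies}) >= 2
        and any(c.offsite for c in copies)
    )


on_premises = [
    Copy("dc-main", "disk", offsite=False),       # primary data
    Copy("dc-main", "disk", offsite=False),       # local backup
    Copy("vault-offsite", "tape", offsite=True),  # offsite tape copy
]
two_copies = [
    Copy("dc-main", "disk", offsite=False),
    Copy("dc-main", "disk", offsite=False),
]
print(satisfies_3_2_1(on_premises))  # True
print(satisfies_3_2_1(two_copies))   # False
```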

In this new world, even simple backup tests become obsolete. Backing up a VM and checking whether the restore works? Not needed anymore, thanks to the tight integration of cloud-native backups into the cloud ecosystem. Thus, companies can focus on testing the real challenges: restoring complete solutions consisting of a mix of database types and IaaS and PaaS services. Just say “hello” to the future of backups in the cloud!