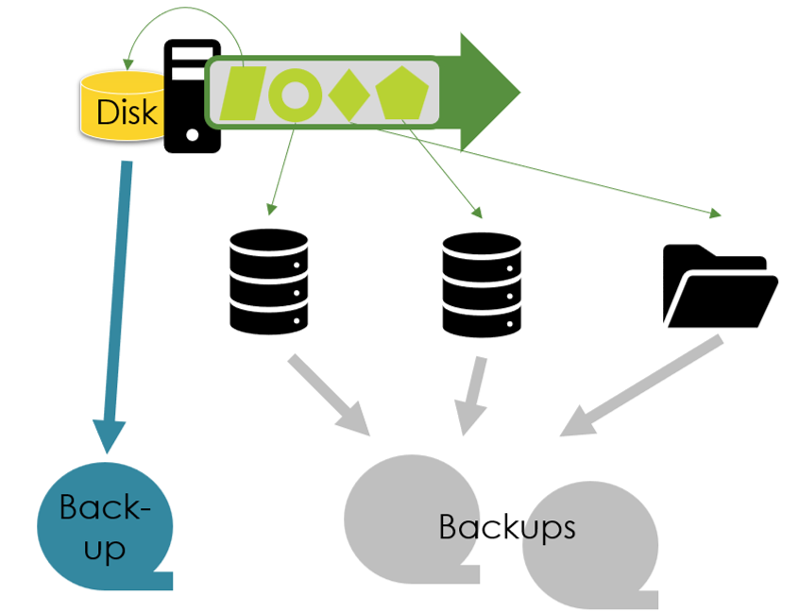

Application backups are not as simple as database lectures suggest. Have you ever heard of the ACID properties, with “A” standing for “atomicity” and “D” for “durability”? After a database commit, everything is on disk, and nothing can get lost. Plus, either all commands within a transaction are on disk or all of them are undone. When a database crashes and restarts, its data reflects precisely the effects of all committed transactions. This works as designed if an application relies on exactly one database. Sadly, applications are more complex, as Figure 1 illustrates. They tend to access not just one database but several in parallel, and they also write data to files, file shares, and disks attached to VMs.

Understanding Application-Consistent Backups and their Benefits

While private users can save their data by manually copying all their files to a different hard disk or the cloud, this approach is too simplistic for larger applications, even if we focus only on VM disks. If the disk is large, files with names starting with “A” might get copied at 5:00 am and those starting with “Z” at 5:05 am. Thus, the “Z-files” could have changed between 5:00 am and 5:05 am, making the A-files and the Z-files inconsistent. Furthermore, copying open files causes issues: changes might exist only in memory and not yet be written to disk, or there could be pending I/O operations. Thus, a clean-up of files and their data might be necessary when restarting the application from such file copies. The clean-up can be automated or a manual task for engineers. In any case, it prolongs application outages.
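The A-file/Z-file problem can be made visible with a few lines of Python. The following is only a minimal sketch: the function name `changed_since` and the idea of comparing modification times against the copy's start time are illustrative assumptions, not part of any backup product. It also cannot see changes that still sit in memory.

```python
from pathlib import Path


def changed_since(root: Path, copy_started: float) -> list[str]:
    """Return the names of files under root modified after copy_started.

    copy_started is a POSIX timestamp taken just before the copy began.
    Every file listed here may be out of step with files copied earlier.
    """
    changed = []
    for path in sorted(root.rglob("*")):
        # A modification time later than the copy start means the file
        # changed while the (slow) copy was still running.
        if path.is_file() and path.stat().st_mtime > copy_started:
            changed.append(path.name)
    return changed
```

Calling `changed_since(Path("/data"), start)` after a copy finishes (with `/data` as a hypothetical source directory) lists the files that were touched during the copy window and are therefore candidates for inconsistency.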

Application consistency overcomes this challenge. The idea is to perform backups such that applications run after restarting a VM without any clean-up actions. Thus, business-critical applications benefit from lower downtimes, i.e., they can meet shorter Recovery Time Objectives (RTOs). Plus, the organization benefits in crisis events, aka business continuity management situations. When a company has to evacuate all workloads to a different cloud data center, the engineers can rely on the VMs to restart and the applications to come up without manual intervention. The engineers can focus on fixing more complex issues, e.g., those related to integrations with other components, rather than the entire IT department being stuck cleaning up file systems.

The most prominent solution for application-consistent backups is Microsoft’s Windows Volume Shadow Copy Service (VSS). Microsoft products come with it, and your organization’s applications (and those of your third-party software providers) can also implement it for Windows workloads. The exact details of VSS are, however, not so relevant from a cloud security architecture perspective. What matters are the features for application-consistent backups available in AWS, GCP, and Azure.

Application-Consistent Backups in the Cloud

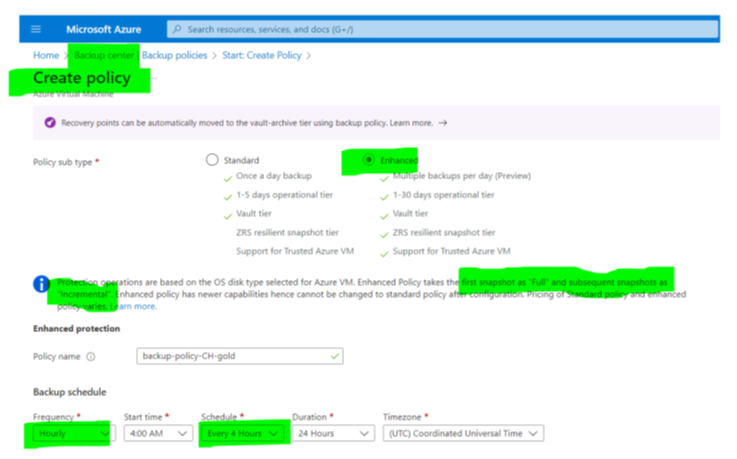

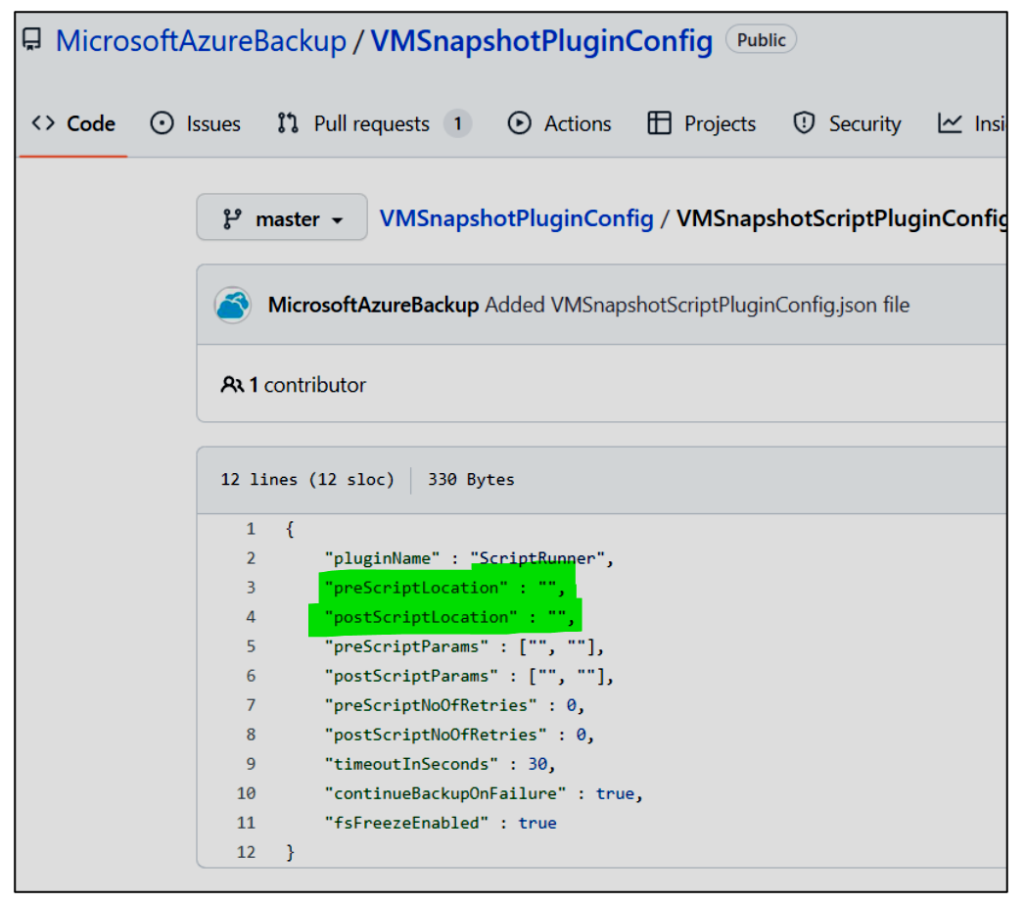

Azure comes with a solution for application-consistent backups for Linux VMs, at least for those deployed with the Azure Resource Manager and not the Service Manager. It is a framework that enables application developers or operations specialists to integrate pre- and post-scripts into Azure’s backup process. Pre-scripts can, for example, invoke APIs of the application to tell it to finish all pending “write” activities. Then, Azure performs the backup copy. Afterward, Azure invokes the post-script, and normal operations continue. For this purpose, a configuration file (Figure 2) must be present on all relevant VMs.
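The pre-script, backup copy, post-script sequence can be sketched in a few lines of Python. This is not Azure’s implementation; the function `run_consistent_backup` and its three callables are hypothetical stand-ins that only illustrate the ordering and the need to resume the application even when the copy fails.

```python
from typing import Callable


def run_consistent_backup(
    pre_script: Callable[[], None],
    take_snapshot: Callable[[], str],
    post_script: Callable[[], None],
) -> str:
    """Quiesce the application, copy the disk, then always resume."""
    # Step 1: ask the application to finish its writes and pause.
    pre_script()
    try:
        # Step 2: the platform copies the disk while writes are paused.
        return take_snapshot()
    finally:
        # Step 3: resume normal operations, even if the copy failed.
        post_script()


# Usage with dummy callables that only record the order of the steps.
events: list[str] = []
snapshot_id = run_consistent_backup(
    pre_script=lambda: events.append("pre"),
    take_snapshot=lambda: events.append("snapshot") or "snap-001",
    post_script=lambda: events.append("post"),
)
```

The `try`/`finally` is the important design choice in this sketch: an application that stays paused because a backup failed would turn a backup problem into an outage.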

The pre- and post-scripts and this configuration file are critical from a security perspective because they run with root privileges. Thus, they must be secured to prevent attackers who have gained access to the VM from changing these settings and executing malicious code as root.
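One way to check this on a VM is to verify the ownership and permissions of each script and of the configuration file. The sketch below assumes a strict policy (owned by root, no access at all for group or others); the policy and the helper name `is_locked_down` are assumptions for illustration, so adapt them to your own hardening guidelines.

```python
import os
import stat


def is_locked_down(path: str, owner_uid: int = 0) -> bool:
    """Return True if path belongs to owner_uid and only the owner has access.

    owner_uid defaults to 0, the uid of root on Linux. The check is an
    assumed policy for this example: any read, write, or execute bit
    for group or others makes the file count as not locked down.
    """
    info = os.stat(path)
    group_or_other_bits = info.st_mode & (stat.S_IRWXG | stat.S_IRWXO)
    return info.st_uid == owner_uid and group_or_other_bits == 0
```

A monitoring job could call `is_locked_down` for the pre-script, the post-script, and the configuration file, and raise an alert whenever it returns `False`.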

The situation for Windows VMs on Azure is much simpler than in the Linux world. By default, all VM backups use the Microsoft VSS service. So, if (and only if!) the applications on the VM implement VSS, all backups are application-consistent without the need for extra VM configurations. If not, the disk backup is not application-consistent but only file-consistent.

Finally, a quick remark on the features of AWS and the Google Cloud Platform (GCP). Both follow the same approach as Azure: pre- and post-scripts for Linux VMs, and Microsoft’s VSS for Windows VMs.

Back to the Big Picture

To conclude: Application-consistent backups reduce application downtimes by reducing the work for engineers after crashes or VM evacuations. However, the term application consistency can be misleading. When looking again at Figure 1, it is clear that consistency between the VM disks and the database backups is not guaranteed. Applications have to cope with the fact that the VM disk backup is from 4:07 am, one database backup is from 4:05 am, and the second from 4:17 am. So, even with application-consistent backups, there are still exciting tasks and challenges for engineers in the area of backup and recovery!
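To make the gap between the backups tangible, the following minimal sketch computes the time skew across a set of backups. The helper name `backup_skew`, the labels, and the calendar date are assumptions chosen for illustration; only the three clock times come from the example above.

```python
from datetime import datetime, timedelta


def backup_skew(backup_times: dict[str, datetime]) -> timedelta:
    """Return the gap between the oldest and the newest backup in the set."""
    return max(backup_times.values()) - min(backup_times.values())


# The date is arbitrary; the clock times are those from the example.
skew = backup_skew({
    "vm-disk": datetime(2024, 1, 1, 4, 7),
    "database-1": datetime(2024, 1, 1, 4, 5),
    "database-2": datetime(2024, 1, 1, 4, 17),
})
print(skew)  # 0:12:00
```

Any write that landed in one data store during those twelve minutes may be missing from another after a restore, which is exactly the reconciliation work the application has to handle.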